How microSD Cards Are Built, How They Fail, and How Professionals Manage Them

The Untold Life of a microSD Card: From Silicon Wafer to Secure Erasure

From the outside, a microSD card looks boring. It is a black rectangle with a logo on top and some gold contacts on the back. You plug it in, it stores data, and as long as your photos or firmware or logs show up when you need them, you do not think about it again.

Inside, though, the lifecycle of that card is far more complicated. It begins on a mirror-polished silicon wafer, passes through a kind of semiconductor acupuncture ritual, goes through secretive factory software that “marries” the memory with its controller, and then spends the rest of its life slowly leaking electrical charge while you expect it to act like permanent storage. Sometimes it works. Sometimes it fails in the field. And sometimes it quietly forgets what you asked it to remember.

If you build products that depend on microSD cards—embedded systems, data loggers, cameras, industrial controllers, point-of-sale terminals—understanding that lifecycle is not a fun piece of trivia. It is the difference between a stable deployment and mysterious support calls six months after launch.

Where a microSD Card Really Begins

The story of a microSD card does not start in a retail box. It starts in a fabrication plant, usually owned by a NAND supplier such as Samsung, Micron, SK hynix, or Kioxia (formerly Toshiba Memory). These facilities are some of the most controlled environments on earth. Airflow, temperature, and airborne particles are monitored more carefully than in most hospital operating rooms.

On a production line that costs billions of dollars to build, wafers are gradually constructed. Layer after layer of material is deposited, patterned with light, etched away, and doped with impurities. This is where the memory cells that eventually become your “32 GB” or “512 GB” microSD cards are physically defined. At this stage, nothing looks like a card. Everything looks like repeated patterns of tiny rectangles on a circular wafer of polished silicon.

Once the circuits are built, there is an obvious question: how much of this wafer is actually usable? That is where wafer probing comes in.

Wafer Probing: Semiconductor Acupuncture on an Industrial Scale

The testing step at the wafer level is performed by probe stations—large, precise machines that lower arrays of microscopic needles onto the surface of the wafer. If you have ever joked that chip testing looks like acupuncture, you were closer to the truth than you might think. It genuinely does look like a bed of ultra-fine needles lightly tapping the surface of the wafer over and over again.

The probe card inside these stations can have hundreds or even thousands of contact points. For NAND, multiple dies are often tested in parallel, which means the tool might be touching down with what amounts to a forest of tungsten needles. Each contact point aligns with a tiny pad on a memory die, and the test equipment sends patterns in, reads responses back, and characterizes how that piece of silicon behaves.

At this stage, several critical measurements are made:

- Speed: How fast can the die read and write?

- Error rates: How often do bits flip, and how easily can they be corrected?

- Bad blocks: Which physical regions are simply unusable?

- Retention behavior: How well does the cell structure hold charge under stress?

The results of this probing determine how the dies are binned. High-performing dies may be routed into higher-grade products. Marginal dies might become lower-capacity or lower-endurance products. Dies with too many problems are discarded. This initial sorting strongly influences how the eventual microSD card will behave years later, in some device you deploy somewhere in the field.
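Here is a minimal sketch of what a binning rule could look like. Every threshold and grade name below is hypothetical and chosen only for illustration. Real binning criteria are proprietary and vary by supplier, process node, and product line.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DieResult:
    die_id: str
    bad_block_fraction: float   # share of blocks marked unusable at probe
    raw_bit_error_rate: float   # bit errors per bit read, before ECC
    meets_speed_target: bool


# Hypothetical limits for illustration only; real limits are proprietary.
PREMIUM_MAX_BAD = 0.01
PREMIUM_MAX_RBER = 1e-4
STANDARD_MAX_BAD = 0.05
STANDARD_MAX_RBER = 1e-3
DOWNSIZE_MAX_BAD = 0.5      # up to half the blocks lost: sell at reduced capacity


def bin_die(result: DieResult) -> str:
    """Sort one probed die into a hypothetical product grade."""
    if (result.meets_speed_target
            and result.bad_block_fraction <= PREMIUM_MAX_BAD
            and result.raw_bit_error_rate <= PREMIUM_MAX_RBER):
        return "premium"
    if (result.bad_block_fraction <= STANDARD_MAX_BAD
            and result.raw_bit_error_rate <= STANDARD_MAX_RBER):
        return "standard"
    if (result.bad_block_fraction <= DOWNSIZE_MAX_BAD
            and result.raw_bit_error_rate <= STANDARD_MAX_RBER):
        return "downsized"
    return "reject"
```

The ordering matters: each die lands in the best grade whose limits it satisfies, and anything that fails every test is discarded.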

Downsizing, Binning, and Why Capacity Does Not Equal Quality

There is a persistent myth that small-capacity cards are somehow “the leftovers” and that huge cards must be built from better wafers. It sounds logical: if only half of a 32 GB region is good, maybe you downsize it to 16 GB and salvage the best half. That kind of thing does happen, but it is not the whole picture and it does not map cleanly to “small equals bad, big equals good.”

In reality, older, lower-density NAND (the 4 GB, 8 GB, and 16 GB products from earlier process generations) often has better endurance and retention than modern high-density TLC or QLC memory. Larger cells, fewer bits per cell, and more generous voltage margins mean those older chips can hold onto data much longer and tolerate more program/erase cycles. Meanwhile, modern 256 GB or 512 GB cards are built on extremely dense nodes, store three (TLC) or four (QLC) bits per cell, and rely heavily on error correction to keep everything in line.

So if you are comparing endurance and data retention, a smaller, older card can actually be the more trustworthy piece of media. The rule of thumb is not “bigger is better” but rather “newer and denser usually trades endurance for capacity and cost efficiency.” That tradeoff is fine when cards are used frequently and refreshed often, but it becomes a problem if you expect a modern high-capacity card to behave like long-term archival storage.

From Die to Card: MPTools and the Marriage of Memory and Controller

After wafer probing and dicing, the usable dies are packaged and paired with a controller. This is where the microSD card begins to look like the product you recognize: a thin substrate with a single molded package that combines both NAND and controller in a compact footprint.

But even at this stage, the card is not ready to ship. The raw controller does not know how the NAND is structured, which blocks are bad, or how best to spread wear across the available space. That knowledge is programmed in using factory software generally known as MPTools—Mass Production Tools.

These tools are not consumer utilities. They are locked, proprietary, and specific to each controller vendor. Inside a production line, MPTools perform several critical jobs:

- NAND characterization: They scan the flash, detect bad blocks, and measure response characteristics.

- Firmware loading: They write the controller firmware that will manage wear leveling, error correction, caching, and power-loss handling for the life of the card.

- FTL construction: They build the Flash Translation Layer (FTL), the mapping system that translates logical block addresses from the host into physical locations on the NAND.

- CID programming: They assign the permanent Card Identification (CID) value, a unique hardware identity that cannot be erased or easily modified later.

- Capacity setting: They decide how much of the physical NAND is usable and how much is reserved as spare area, then set the reported capacity accordingly.
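The FTL and capacity-setting steps are easier to picture with a toy model. The sketch below is a hypothetical, heavily simplified block-mapped FTL, not any vendor's firmware. It hides bad blocks, holds back a spare pool, reports only the remaining blocks as capacity, and writes out of place with a naive "least-erased free block" wear-leveling choice. Real FTLs typically map at finer granularity, defer erasure to garbage collection, and persist their tables in flash.

```python
from __future__ import annotations


class ToyFTL:
    """Toy block-mapped flash translation layer (illustration only).

    Each logical block maps to one physical block. Writes are out-of-place:
    new data goes to a fresh erased block and the old one is reclaimed.
    """

    def __init__(self, physical_blocks: int, bad_blocks: set[int], spare_blocks: int):
        good = [b for b in range(physical_blocks) if b not in bad_blocks]
        if spare_blocks < 1 or spare_blocks >= len(good):
            raise ValueError("need at least one spare block and some user blocks")
        # Reported capacity excludes bad blocks and the reserved spare pool.
        self.logical_blocks = len(good) - spare_blocks
        self.free = list(good)               # erased blocks ready to program
        self.mapping: dict[int, int] = {}    # logical block -> physical block
        self.data: dict[int, bytes] = {}     # physical block -> contents
        self.erase_counts = {b: 0 for b in good}

    def write(self, lba: int, payload: bytes) -> int:
        if not 0 <= lba < self.logical_blocks:
            raise IndexError("LBA outside reported capacity")
        # Naive wear leveling: program the free block with the fewest erases.
        target = min(self.free, key=lambda b: self.erase_counts[b])
        self.free.remove(target)
        self.data[target] = payload
        old = self.mapping.get(lba)
        self.mapping[lba] = target
        if old is not None:
            # The old copy is now stale; erase it and return it to the pool.
            del self.data[old]
            self.erase_counts[old] += 1
            self.free.append(old)
        return target

    def read(self, lba: int) -> bytes:
        phys = self.mapping.get(lba)
        return self.data.get(phys, b"") if phys is not None else b""
```

Because the spare pool is never counted as capacity, there is always at least one erased block available, which is what lets every write land somewhere new.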

That CID value becomes important later. For now, the key point is that MPTools are the “marriage ceremony” between the controller and the NAND. After this step, the card boots up as a recognizable storage device. Before it, the card is just parts.

Factory Testing of the Finished Card

Once the controller knows its NAND and the FTL is established, the finished microSD card goes through another wave of testing. This time the focus is not raw silicon behavior but system-level behavior: does it respond correctly to host commands, does it sustain the advertised speed class, does it handle standard file system formatting, and does it remain stable under stress?

Manufacturers will run patterns across the card, check performance metrics, confirm that the capacity is correct, and verify that error correction is functioning as expected. Higher-grade industrial cards may also be tested across wider temperature ranges and under more aggressive stress conditions. By the time the card leaves the factory, it has been poked, prodded, and validated multiple times at multiple levels.

None of that guarantees a perfect experience in the field. It just means the card met its intended design envelope on day one.

Why Companies Care About the CID During Data Loading

That permanent CID value programmed during MPTool initialization is not just a manufacturing artifact. Many companies make deliberate use of it during their own production and deployment process.

For some, the CID is an authentication anchor. A device might only fully unlock if a card with an expected CID pattern is present. That can be used for license enforcement, anti-cloning, or simple configuration control. Others use the CID for traceability: the CID is logged alongside the firmware version, device serial number, and batch identifier so that any field failure can be traced back to the exact media that was shipped with that unit.
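The CID itself is a 128-bit register whose field layout is defined in the SD Association's Physical Layer Specification. On Linux, when a card sits on a native SD/MMC host controller (most USB card readers do not pass it through), the kernel exposes it as a hex string, typically at `/sys/block/mmcblk0/device/cid`. That path and device name are assumptions about your system. The sketch below decodes the main fields.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SdCid:
    manufacturer_id: int
    oem_id: str
    product_name: str
    revision: str
    serial: int
    year: int
    month: int


def parse_sd_cid(cid_hex: str) -> SdCid:
    """Decode a 128-bit SD CID register given as 32 hex digits."""
    value = int(cid_hex.strip(), 16)
    if value >> 128:
        raise ValueError("CID must be 128 bits")

    def bits(hi: int, lo: int) -> int:
        # Extract register bits [hi:lo], inclusive, as in the SD spec tables.
        return (value >> lo) & ((1 << (hi - lo + 1)) - 1)

    prv = bits(63, 56)   # product revision, BCD "n.m"
    mdt = bits(19, 8)    # manufacturing date: years since 2000, then month
    return SdCid(
        manufacturer_id=bits(127, 120),
        oem_id=bits(119, 104).to_bytes(2, "big").decode("ascii", errors="replace"),
        product_name=bits(103, 64).to_bytes(5, "big").decode("ascii", errors="replace"),
        revision=f"{prv >> 4}.{prv & 0xF}",
        serial=bits(55, 24),
        year=2000 + (mdt >> 4),
        month=mdt & 0xF,
    )
```

For an authentication or traceability check, comparing the manufacturer ID, OEM ID, and serial number against recorded values is more meaningful than trusting the product-name string alone.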

In high-volume deployments, that level of traceability matters. If a batch of cards with a specific CID range begins to fail, you want to know exactly which customers might be affected and what image was flashed at the time. That is difficult to do if you treat microSD cards as anonymous commodities. It is much easier if you treat each card as a uniquely identified component.

How Data Actually Gets Loaded: Factory versus In-House Duplication

Once you have cards and you have an image—an OS build, a firmware package, a set of application data—the next step is loading that content. Broadly speaking, there are two paths: factory loading and in-house duplication.

Factory loading means sending your content to the flash supplier and asking them to integrate it into their MPTool process. Technically, it works. Practically, it means your proprietary image leaves your network, crosses borders, and ends up in the hands of a manufacturing ecosystem that you do not control. For some companies, that is fine. For others, especially in medical, automotive, defense, or any security-sensitive industry, that is a non-starter.

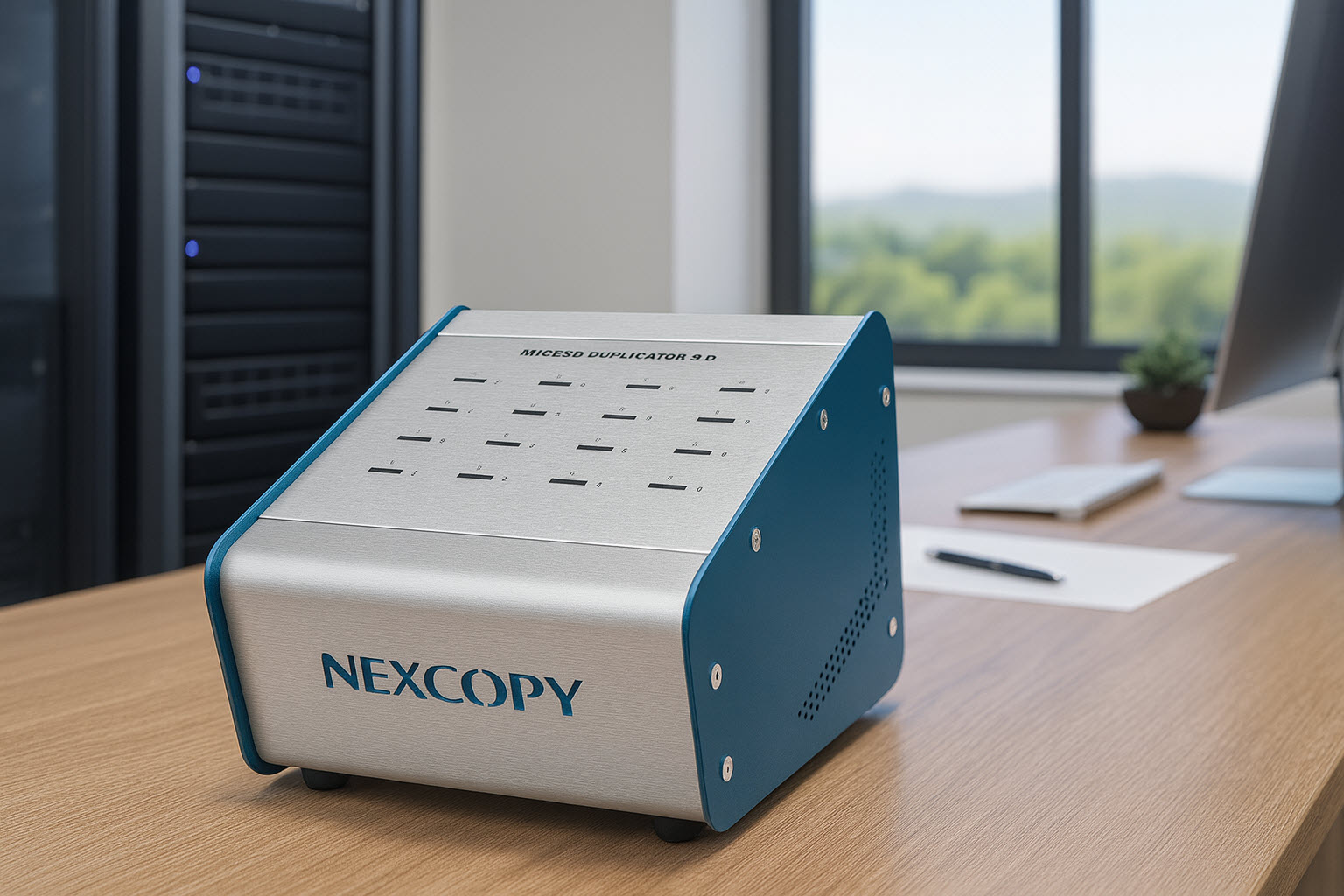

The alternative is to keep content loading in-house. That is where dedicated duplication systems come in. This company, for example, builds microSD duplication systems specifically designed for controlled, repeatable, high-volume in-house loading. Their PC-based microSD160PC model can take a known-good IMG file, clone it sector-by-sector to multiple cards at once, read and log each card’s CID value, and perform secure erasure using multi-pass overwrite methods. The companion mSD115SA standalone unit offers similar performance with a much simpler, one-button workflow and no PC required.

In both cases, the key concept is control. The content never leaves your facility. You decide when images are updated, how verification is done, and which CID values are paired with which firmware builds. Instead of hoping that a vendor on the other side of the world got it right, you know exactly what was written to each card and when.
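As a rough illustration of the principle behind sector-by-sector cloning with verification, here is a minimal sketch. It does not describe how the microSD160PC or mSD115SA work internally. It assumes the card appears as a writable block device (a hypothetical `/dev/sdX`) and that the process has permission to write it. A production tool would also bypass the operating system's page cache, for example with `O_DIRECT` or by re-inserting the card, so the verify pass reads the media rather than cached copies.

```python
import hashlib
import os

CHUNK = 4 * 1024 * 1024  # 4 MiB, a whole number of 512-byte sectors


def clone_and_verify(image_path: str, target_path: str) -> str:
    """Copy image_path onto target_path byte-for-byte, then read back and compare.

    Returns the SHA-256 hex digest of the image, suitable for logging.
    """
    src_hash = hashlib.sha256()
    size = os.path.getsize(image_path)
    with open(image_path, "rb") as src, open(target_path, "r+b") as dst:
        while chunk := src.read(CHUNK):
            src_hash.update(chunk)
            dst.write(chunk)
        dst.flush()
        os.fsync(dst.fileno())  # make sure data has left OS buffers

    # Read back exactly as many bytes as the image contains and hash them.
    dst_hash = hashlib.sha256()
    remaining = size
    with open(target_path, "rb") as dst:
        while remaining > 0:
            chunk = dst.read(min(CHUNK, remaining))
            if not chunk:
                raise OSError("target is smaller than the image")
            dst_hash.update(chunk)
            remaining -= len(chunk)

    if dst_hash.hexdigest() != src_hash.hexdigest():
        raise OSError("verification failed: card contents differ from image")
    return src_hash.hexdigest()
```

Returning the image hash makes it easy to record exactly which build went onto each card.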

How microSD Cards Lose Data Even Sitting on a Shelf

One of the more surprising realities of modern NAND is that it does not behave like archival storage. You might assume that if you write data to a card, put it in a vault, and never touch it again, it should be safe for decades. That assumption works for certain optical formats and some tape technologies. It does not hold for modern TLC and QLC microSD cards.

The core problem is that NAND stores data as electrical charge in tiny storage structures (floating gates or charge-trap layers) inside the silicon, and the insulating layers around those structures are not perfect. Over time, charge leaks away. The smaller the process node and the more bits stored per cell, the smaller the margin for error. Older SLC and early MLC devices could hold data for many years. Modern high-density NAND often has a practical retention window measured in a handful of years at room temperature, and less at elevated temperatures.

Temperature has a large effect. A card stored at 15 degrees Celsius in a cool room will retain data longer than a card stored in a warm warehouse or in the back of a vehicle. Every increase in temperature shortens the retention window. Meanwhile, cards that have already seen heavy program/erase cycling will have less headroom than cards written once and stored immediately.
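Reliability engineers commonly use an Arrhenius model to put rough numbers on how temperature shortens retention. The sketch below assumes an activation energy of 1.1 eV, the value commonly cited from JEDEC's JESD218 SSD standard for retention acceleration. Real cards may behave differently, and the retention figure at the reference temperature is an input you would have to obtain from the vendor.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def acceleration_factor(t_ref_c: float, t_hot_c: float, ea_ev: float = 1.1) -> float:
    """How many times faster charge loss proceeds at t_hot_c than at t_ref_c.

    Arrhenius model: AF = exp((Ea / k) * (1/T_ref - 1/T_hot)), temperatures in kelvin.
    """
    t_ref_k = t_ref_c + 273.15
    t_hot_k = t_hot_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_ref_k - 1.0 / t_hot_k))


def retention_at(t_c: float, years_at_ref: float, t_ref_c: float = 25.0,
                 ea_ev: float = 1.1) -> float:
    """Scale a retention figure quoted at t_ref_c to storage at t_c."""
    return years_at_ref / acceleration_factor(t_ref_c, t_c, ea_ev)
```

Under these assumptions, moving a card from 25 °C to 40 °C speeds up charge loss roughly eight-fold, so a hypothetical five-year retention figure at 25 °C shrinks to well under a year. Treat the result as an order-of-magnitude planning aid, not a prediction for a specific card.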

All of this adds up to an uncomfortable conclusion: a microSD card is not a time capsule. Whatever you store on it will not remain rock solid for twenty-five years. Expecting it to behave like permanent storage is a category error. It is better to think of it as a working medium that happens to be removable, not as an archival medium that will last a human lifetime.

What Actually Causes Corruption in the Field

Field corruption rarely happens because of a single grand event. More often it is a combination of small, predictable stresses that add up over time. Power loss during writes is one of the most common triggers. If a device is writing to the card and power is cut at the wrong moment—a battery disconnects, a power cable is jostled, someone pulls the card mid-write—the file system can be left in an inconsistent state. Some controllers handle this gracefully. Others do not.
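On the application side, one standard way to limit power-loss damage is to never overwrite an important file in place. Instead, write a temporary file, flush it to the card, and atomically rename it over the original. The sketch below shows that pattern. The guarantee is strongest on journaling file systems such as ext4. On the FAT-family file systems common on microSD cards, the pattern narrows the window without closing it, and it cannot protect against a controller that corrupts its own mapping tables.

```python
import os
import tempfile


def atomic_write(path: str, data: bytes) -> None:
    """Replace path with data so readers see the old file or the new one, not half of each."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".tmp-")
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
            tmp.flush()
            os.fsync(tmp.fileno())     # push file contents down to the card
        os.replace(tmp_path, path)     # atomic rename on POSIX file systems
    except BaseException:
        if os.path.exists(tmp_path):
            os.unlink(tmp_path)        # do not leave partial temp files behind
        raise
    # Persist the directory entry that now points at the new file (POSIX only).
    if hasattr(os, "O_DIRECTORY"):
        dir_fd = os.open(directory, os.O_RDONLY | os.O_DIRECTORY)
        try:
            os.fsync(dir_fd)
        finally:
            os.close(dir_fd)
```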

Another common cause is the quality of the controller itself. A microSD card is not just a piece of memory; it is a miniature embedded system. Cheap controllers use weaker error correction, minimal wear leveling, and simpler firmware logic. They may work fine at first and then begin to misbehave as wear accumulates. Files go missing, partitions become corrupted, the card suddenly reports as read-only, or it refuses to initialize.

The quality of the NAND matters as well. There is a difference between tier-one NAND from reputable suppliers and the mixed, reclaimed, or heavily down-binned NAND you sometimes find in generic or counterfeit cards. Such NAND can work for simple, non-critical tasks, but it is a poor candidate for anything you expect to run reliably for years.
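Counterfeit cards often report more capacity than the NAND inside can actually hold. A common incoming-inspection check, the idea behind tools such as f3 and H2testw, is to fill the whole claimed capacity with position-dependent data and read it all back. The sketch below is a minimal, destructive illustration. It assumes Python 3.9+ (for `random.Random.randbytes`) and a card exposed as a writable block device. Re-inserting the card between the write and read phases helps ensure the reads come from the card rather than the operating system's cache.

```python
import os
import random

BLOCK = 1024 * 1024  # test in 1 MiB units


def block_pattern(index: int) -> bytes:
    """Deterministic, position-dependent content for block `index`."""
    return random.Random(index).randbytes(BLOCK)


def fill_and_verify(path: str, claimed_bytes: int) -> list[int]:
    """Write a unique pattern to every block, then read everything back.

    DESTRUCTIVE: overwrites the target. Returns indexes of blocks that
    did not read back correctly (an empty list means the fill test passed).
    """
    blocks = claimed_bytes // BLOCK
    with open(path, "r+b") as dev:
        for i in range(blocks):
            dev.seek(i * BLOCK)
            dev.write(block_pattern(i))
        dev.flush()
        os.fsync(dev.fileno())

    bad = []
    with open(path, "rb") as dev:
        for i in range(blocks):
            dev.seek(i * BLOCK)
            if dev.read(BLOCK) != block_pattern(i):
                bad.append(i)
    return bad
```

On a fake-capacity card, writes past the real capacity typically wrap around or vanish, so later blocks fail to match or earlier blocks get overwritten with the wrong pattern.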

Add heat, vibration, humidity, and usage patterns that push cards close to their endurance limits, and you have a recipe for the kind of intermittent, hard-to-reproduce issues that marketing departments hate and support departments live with.

Keeping microSD Cards Reliable Over the Long Term

Given all this, how do professionals keep microSD-based systems reliable? They do not rely on hope. They use a set of practical strategies that acknowledge the physics and the economics involved.

First, they start with a known-good master image. That means building a reference system, validating it thoroughly, capturing an IMG file from that system, and then using that image as the source for all duplication. They do not assemble field units by manually installing operating systems and copying files one at a time. They image from a clean master. That master is tested and archived, and any future changes are deliberate.

Second, they choose media carefully. That usually means avoiding undocumented bargain cards entirely, selecting reputable brands, and specifying industrial or high-endurance product lines when the application justifies it. The cost difference between an industrial card and a generic budget card feels significant on a purchasing spreadsheet. It feels trivial compared to the cost of a field failure at the wrong customer.

Third, they implement refresh cycles. A refresh is a simple concept: read all the data and rewrite it. Because the controller programs the rewritten data into freshly erased cells, the stored charge starts again at full strength and the retention clock effectively restarts. In some environments a yearly refresh is enough. In hotter environments or with extremely dense NAND, refreshes may happen more frequently. The important part is that someone acknowledges that data retention has a clock and puts a maintenance plan in place instead of assuming the clock does not exist.
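A host-side refresh can be as simple as reading each chunk of the card and writing the same bytes back to the same offset. Here is a minimal sketch, assuming an unmounted card exposed as a block device and a stable power supply. An interrupted refresh is itself a power-loss-during-write event, so real tools usually also keep a verified image to fall back on.

```python
import os

CHUNK = 4 * 1024 * 1024  # 4 MiB, a whole number of 512-byte sectors


def refresh_in_place(path: str, chunk_size: int = CHUNK) -> int:
    """Read every chunk of path and write the same bytes back to the same offset.

    The controller corrects marginal bits with ECC on read, and the write lands
    in freshly programmed cells. Returns the number of bytes rewritten.
    """
    offset = 0
    with open(path, "r+b") as dev:
        while True:
            dev.seek(offset)
            chunk = dev.read(chunk_size)
            if not chunk:
                break
            dev.seek(offset)       # go back and overwrite what was just read
            dev.write(chunk)
            offset += len(chunk)
        dev.flush()
        os.fsync(dev.fileno())
    return offset
```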

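Fourth, they use write protection where possible. Once data is loaded and verified and does not need to change, locking the card into a read-only mode eliminates one of the main sources of corruption: unplanned writes. There are software-level approaches, such as mounting the file system read-only or marking the block device read-only in the operating system, and hardware-level approaches, such as the write-protect bits defined in the card's CSD register on controllers that honor them. The principle is the same either way: if bits do not need to move, do not let them move.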
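On Linux, the kernel usually exposes the card's CSD register next to its CID (for example `/sys/block/mmcblk0/device/csd` on a native SD host; that path is an assumption about your system). The sketch below reads the two write-protect flags, which the SD Physical Layer Specification places at bit 13 (PERM_WRITE_PROTECT) and bit 12 (TMP_WRITE_PROTECT). Setting them requires the PROGRAM_CSD command (CMD27), which ordinary card readers and most OS tools do not expose. In practice, software-side protection such as a read-only mount or `blockdev --setro` is often the easier lever.

```python
def csd_write_protect(csd_hex: str) -> dict[str, bool]:
    """Report the write-protect flags in a 128-bit SD CSD register (32 hex digits)."""
    value = int(csd_hex.strip(), 16)
    if value >> 128:
        raise ValueError("CSD must be 128 bits")
    return {
        "permanent": bool((value >> 13) & 1),  # PERM_WRITE_PROTECT, bit 13
        "temporary": bool((value >> 12) & 1),  # TMP_WRITE_PROTECT, bit 12
    }
```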

Fifth, they log CIDs and other metadata during duplication. A system that reads the CID from each card, pairs it with the image version and date of duplication, and records that information in a log creates traceability. If something goes wrong in the field, you can look back and see exactly which card was in that system, which image was used, and whether that card was part of a wider batch that is starting to misbehave.
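A traceability log does not need to be elaborate. Here is a minimal sketch that appends one CSV row per duplicated card and looks cards up by CID. The file name, column names, and version strings are hypothetical choices for illustration.

```python
import csv
import os
from datetime import datetime, timezone

LOG_FIELDS = ["cid", "image_version", "image_sha256", "duplicated_at"]


def log_duplication(log_path: str, cid: str, image_version: str, image_sha256: str) -> None:
    """Append one traceability record for a freshly duplicated card."""
    is_new = not os.path.exists(log_path) or os.path.getsize(log_path) == 0
    with open(log_path, "a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=LOG_FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow({
            "cid": cid.strip().lower(),          # normalize hex case for lookups
            "image_version": image_version,
            "image_sha256": image_sha256,
            "duplicated_at": datetime.now(timezone.utc).isoformat(),
        })


def lookup_card(log_path: str, cid: str) -> list[dict[str, str]]:
    """Return every record for a CID, e.g. when a field unit reports a fault."""
    wanted = cid.strip().lower()
    with open(log_path, newline="") as f:
        return [row for row in csv.DictReader(f) if row["cid"] == wanted]
```

Storing the image hash alongside the human-readable version string means a mislabeled build still shows up as a mismatch.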

Finally, they use proper duplication tools. A system like this company’s PC-based microSD160PC unit or the mSD115SA standalone duplicator does more than copy files. It creates sector-based clones, verifies them, reads CIDs, supports secure erase when cards are reused, and allows an entire production run of cards to be built from the same trusted source image.

Repair, Recovery, and Knowing When to Let Go

When a card fails despite all precautions, the question becomes: can it be repaired, and is it worth repairing? The honest answer is that it depends less on the card and more on the value of the data.

If the failure is purely at the file-system level—corrupted directory structures, damaged partitions, miswritten metadata—then conventional data-recovery tools may be enough. They can scan the card for recognizable patterns, rebuild file tables, and recover usable data. When that works, it is a relief, but it does not change the fact that something undermined the card’s stability in the first place.
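"Scanning for recognizable patterns" usually means file carving: searching a raw dump of the card for known file signatures, independent of the damaged file system. Here is a minimal sketch for JPEG photos, assuming you already have a raw image of the card in memory. It deliberately ignores fragmented files. It can also stop early on JPEGs that embed a thumbnail, because the thumbnail carries its own end marker. Real recovery tools handle both cases.

```python
SOI = b"\xff\xd8\xff"  # JPEG start-of-image marker plus the next marker's prefix
EOI = b"\xff\xd9"      # JPEG end-of-image marker


def carve_jpegs(raw: bytes, max_size: int = 20 * 1024 * 1024) -> list[bytes]:
    """Return byte runs that start with a JPEG header and end at the next end marker."""
    found = []
    pos = raw.find(SOI)
    while pos != -1:
        end = raw.find(EOI, pos + len(SOI), pos + max_size)
        if end == -1:
            # No end marker within max_size: skip this header and keep looking.
            pos = raw.find(SOI, pos + 1)
            continue
        found.append(raw[pos:end + len(EOI)])
        pos = raw.find(SOI, end + len(EOI))
    return found
```

For a full-size card dump, memory-mapping the image file (for example with Python's `mmap` module) avoids loading the whole card into RAM.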

If the controller itself is failing, things get harder. Most microSD cards are monolithic: the controller and NAND are sealed together in a single molded package, so you cannot simply unsolder the controller and replace it. When the controller firmware is corrupt, the internal mapping tables are lost, or the controller cannot reliably talk to the NAND, the card is usually done. Forensic labs can sometimes perform chip-off recovery by physically exposing the NAND die, wiring into it, extracting raw dumps, and reconstructing the logical layout, but that is specialized work. It is expensive, time-consuming, and justified only when the data is irreplaceable: cryptographic keys, critical legal evidence, or a large volume of financial records that exist nowhere else.

For most use cases, the right answer is simpler: if a card is unstable, replace it. Try to recover what you can, learn what you can, and then take it out of circulation. A card that has failed once in a non-trivial way is not a card you want to trust again in a critical deployment.

Secure Erasure and the End of the microSD Lifecycle

The last chapter in the life of a microSD card is not about performance or capacity; it is about security and disposal. At some point, you will have cards that you want to retire, recycle, donate, or repurpose. Before any of that, you need to make sure whatever was on them is gone.

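Deleting files is not enough. Formatting is not enough. Deleting a file only removes the file system's references to it, and a quick format mostly rewrites file system metadata, so the underlying sectors keep their old contents until something overwrites them. Wear leveling and block remapping add a second layer: when the controller relocates data, older physical copies can linger in blocks that no logical address points to any more. If the data on the card is sensitive, that is not acceptable. At a minimum, you need to overwrite every addressable sector, not just the file system structures.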

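That is what multi-pass secure erasure schemes, such as DoD 5220.22-M style overwrites, are designed to do. A proper DoD-style erase writes known patterns across every addressable sector multiple times (often a pass of zeros, a pass of ones, and one or more passes of random data), followed by verification. When such a process is implemented correctly, typical recovery tools cannot reconstruct readable information from the card. It is functionally wiped. Because these overwrites go through the controller's logical address space, however, they cannot guarantee that stale copies held in spare or retired blocks are overwritten as well. That residual uncertainty is what physical destruction addresses.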

Duplication systems that include secure erase functions make this practical at scale. Rather than relying on ad hoc software utilities and manual procedures, you insert the cards into a dedicated appliance, select the erase mode, and let the system handle the heavy lifting. Once the erase and verification passes are complete, the cards can be reused, passed on, or physically destroyed, depending on policy.

Physical destruction sits at the very end of the lifecycle. For extremely sensitive environments, organizations will sometimes shred or crush cards after erasing them simply to eliminate any remaining uncertainty. With a monolithic card, destruction is straightforward: once the silicon is fractured, there is no practical way to read it again.

Closing the Loop: From Fab to Field and Back Again

When you step back and look at the full arc, the lifecycle of a microSD card is not a simple line from “new” to “old.” It is a loop that passes through multiple distinct phases: fabrication, probing, packaging, MPTools initialization, factory testing, in-house duplication, field deployment, maintenance and refresh, eventual failure, secure erasure, and disposal.

At each step, decisions made by different actors shape how long that card will perform and how safely it will handle the data entrusted to it. The semiconductor manufacturer makes choices about process nodes and binning. The controller vendor makes choices about firmware design and error correction. The card assembler makes choices about quality testing. And you, as the system builder or integrator, make choices about the media you specify, the duplication tools you use, the refresh policies you implement, and the way you retire and replace cards.

It is easy to treat microSD cards as a commodity, as something you buy in bulk and forget about. In reality, they are one of the more complex, failure-sensitive components in many modern systems. Treat them with the same seriousness you would treat power supplies, firmware updates, or network security, and they will generally behave well. Treat them as throwaway media and you are more likely to meet them again in the form of support tickets.

For organizations that want to stay in control of that lifecycle, in-house microSD duplication and secure erase capabilities are not luxuries. They are part of a basic hygiene kit. A product line like this company’s microSD duplicator range, with both PC-based and standalone systems, is one way to put that control into practice: verified imaging on the way in, secure erasure on the way out, and transparency in between.

The technology behind microSD is complicated. The decisions you make around it do not have to be. Understand the lifecycle, respect the physics, and use the right tools, and those tiny black cards stop being mysterious sources of risk and start behaving like the predictable components you always hoped they were.