Inside an AI Computer: Why Modern AI Systems Consume So Much Memory

What an AI Server Really Looks Like When You Open the Lid

There’s a lot of noise right now about AI using “too much memory.” Prices are up. Supplies are tight. Everyone says demand is exploding. You’ve probably read that already.

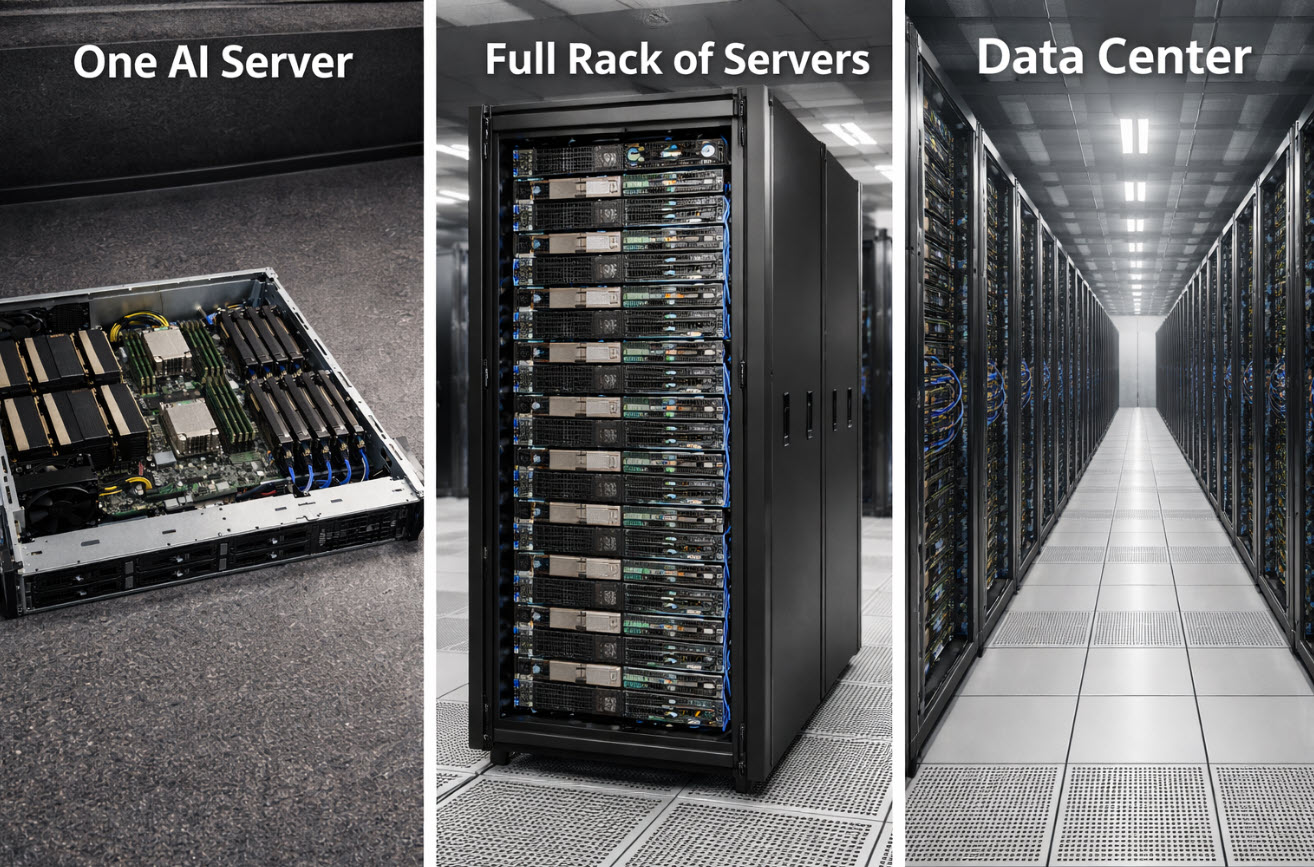

But most of what’s written skips the most important part: what an AI computer physically looks like, and why it needs so much memory in the first place. Not in abstract charts or market forecasts, but in terms you can picture. Once you understand what one AI system actually consumes, the rest of the story stops sounding dramatic and starts sounding inevitable.

I ended up explaining this recently in a place that has nothing to do with data centers. I was at my kid’s school for a “parent day,” standing in a classroom, and a few students started asking about AI. Not chatbot questions. Real questions. What does the computer look like? Where does the data go? Why does everyone keep saying “memory” like it’s the whole game?

So I started small. I asked them to imagine a normal computer. One you’d use at home. It has a processor that does math, memory that holds what it’s working on right now, and storage that keeps things for later. Homework files. Photos. Games. Everything has a place.

Then I told them AI is still a computer, but it’s not doing one small task at a time. It’s running huge amounts of math across huge amounts of data, over and over, comparing patterns, adjusting weights, saving checkpoints, and doing it fast enough that waiting on storage becomes the enemy.

That’s why memory becomes the star of the show. Not because it’s trendy. Because without enough memory and fast storage, the GPUs sit around waiting, and that’s like buying a race car and leaving it parked because the road is too narrow.

An AI server isn’t a single desktop tower. It’s closer to a tightly packed workstation on steroids. A common training server today has eight GPUs, and each GPU has its own ultra-fast memory, usually high-bandwidth memory (HBM), just to keep up with the math. On top of that, the server has a large pool of system memory to feed the GPUs without bottlenecks, and then a pile of NVMe SSD storage inside the chassis to stage datasets and write out intermediate results.

When you see a real bill of materials for one of these machines, it’s not subtle. You’re looking at hundreds of gigabytes of GPU memory, often half a terabyte to multiple terabytes of system RAM, and tens of terabytes of NVMe SSD storage inside a single server. Not across the network. Not in a shared SAN. Inside one box.

That’s usually the moment the room gets quiet, because the scale starts to feel real. One server can have more flash storage than most households will ever own. And AI doesn’t scale by adding one server and calling it a day. It scales by adding dozens or hundreds at a time, because training large models is a team sport. One machine doesn’t carry the whole load.
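To put rough numbers on that single-server bill of materials, here is a small Python sketch that tallies the memory and flash inside one eight-GPU training server. Every figure in it is an illustrative assumption, not a specific vendor’s spec: 80 GB of GPU memory per GPU, 2 TB of system RAM, and eight NVMe drives of 3.84 TB each. Swap in the numbers from a real quote and the totals follow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerConfig:
    """Hypothetical per-server configuration; every default is an illustrative assumption."""
    gpus: int = 8
    hbm_per_gpu_gb: float = 80.0    # assumed GPU memory per GPU, in GB
    system_ram_gb: float = 2_000.0  # assumed system RAM (2 TB), in GB
    nvme_drives: int = 8            # assumed number of NVMe SSDs in the chassis
    nvme_drive_tb: float = 3.84     # assumed capacity of each NVMe SSD, in TB

    def gpu_memory_gb(self) -> float:
        # Total GPU memory across all GPUs in the box.
        return self.gpus * self.hbm_per_gpu_gb

    def nvme_tb(self) -> float:
        # Total local flash across all NVMe drives in the box.
        return self.nvme_drives * self.nvme_drive_tb


def summarize(cfg: ServerConfig) -> dict[str, float]:
    """Return the three headline numbers, using decimal units (1 TB = 1,000 GB)."""
    return {
        "gpu_memory_gb": cfg.gpu_memory_gb(),
        "system_ram_tb": cfg.system_ram_gb / 1_000,
        "nvme_tb": cfg.nvme_tb(),
    }


if __name__ == "__main__":
    for name, value in summarize(ServerConfig()).items():
        print(f"{name}: {value:,.2f}")
```

With those assumed defaults, it reports 640 GB of GPU memory, 2 TB of system RAM, and about 30.7 TB of NVMe flash, which lands in the “hundreds of gigabytes” and “tens of terabytes” ranges described above.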

So if one AI server quietly consumes twenty or thirty terabytes of flash, what happens when you deploy one hundred of them? You’re suddenly talking about petabytes of NAND flash consumed in a single build-out, and that’s before you count shared storage systems, replication, backup, logging, and the “we need it faster than last quarter” upgrades that happen as soon as the cluster is in production.
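The scaling step is plain multiplication. Here is a minimal Python sketch of it, assuming decimal units (1 PB = 1,000 TB, the way drive vendors quote capacity) and counting only the flash inside the servers themselves. Shared storage, replication, and backup are deliberately left out, so the result is a floor rather than a total.

```python
TB_PER_PB = 1_000  # decimal units, as drive vendors quote capacity


def fleet_flash_pb(servers: int, tb_per_server: float) -> float:
    """Raw NAND flash inside the servers themselves, in petabytes.

    Excludes shared storage systems, replication, backup, and logging.
    """
    if servers < 0 or tb_per_server < 0:
        raise ValueError("servers and tb_per_server must be non-negative")
    return servers * tb_per_server / TB_PER_PB


if __name__ == "__main__":
    # The scenario from the text: 100 servers at twenty to thirty terabytes each.
    low = fleet_flash_pb(100, 20)
    high = fleet_flash_pb(100, 30)
    print(f"In-server flash for 100 servers: {low:.1f} to {high:.1f} PB")
```

For 100 servers at twenty to thirty terabytes each, it prints 2.0 to 3.0 PB, and every layer of storage added outside those servers only pushes the number higher.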

This is where the memory shortage stories stop sounding like hype and start sounding like basic arithmetic. Memory factories don’t turn on overnight. Flash and DRAM take years to plan, build, qualify, and ramp. Even as headline memory prices fluctuate, the underlying supply constraints don’t disappear when demand arrives in data-center-sized chunks.

And then you get the second wave: rack-scale systems. Instead of treating each server as its own machine, vendors now build entire racks that behave like one computer. Dozens of GPUs in a rack, massive pools of memory, rows of SSDs packed edge-to-edge. Distances measured in inches, not meters, because speed matters that much.

At that point you’re no longer asking, “How much memory does one computer need?” You’re asking, “How much memory does a rack need?” And that’s exactly why consumer electronics feel the squeeze. Not because consumers suddenly started buying twice as many phones, but because a different buyer showed up with a different scale of demand, and they buy in blocks that look like whole data centers.

At the storage level, this also explains why long-standing assumptions about flash behavior are breaking down. When AI workloads dominate demand, distinctions like MLC versus TLC NAND (two versus three bits stored per cell) stop being academic and start affecting availability, endurance, and allocation decisions in very real ways.

The kids didn’t need a supply chain lecture to get it. They just needed the picture. One box becomes a row. A row becomes a rack. A rack becomes a room. A room becomes a building. Once you see AI as infrastructure, not magic, the memory story becomes physical, mechanical, and predictable.

And that’s the point. This isn’t a mysterious shortage. It’s a predictable collision between capacity that takes years to build and demand that can appear overnight in the form of a few truckloads of servers headed to a warehouse-sized building.

Put simply: AI isn’t just using more memory than before. It’s redefining what a normal amount of memory even is.

How this article was created

Author bio below — USB Storage Systems & Duplication Specialist

This article was drafted with AI assistance for outlining and phrasing, then reviewed, edited, and finalized by a human author to improve clarity, accuracy, and real-world relevance.

Image disclosure

The image at the top of this article was generated using artificial intelligence for illustrative purposes. It is not a photograph of a real environment.

Tags: AI infrastructure, data centers, memory shortage, NAND flash, SSD demand